
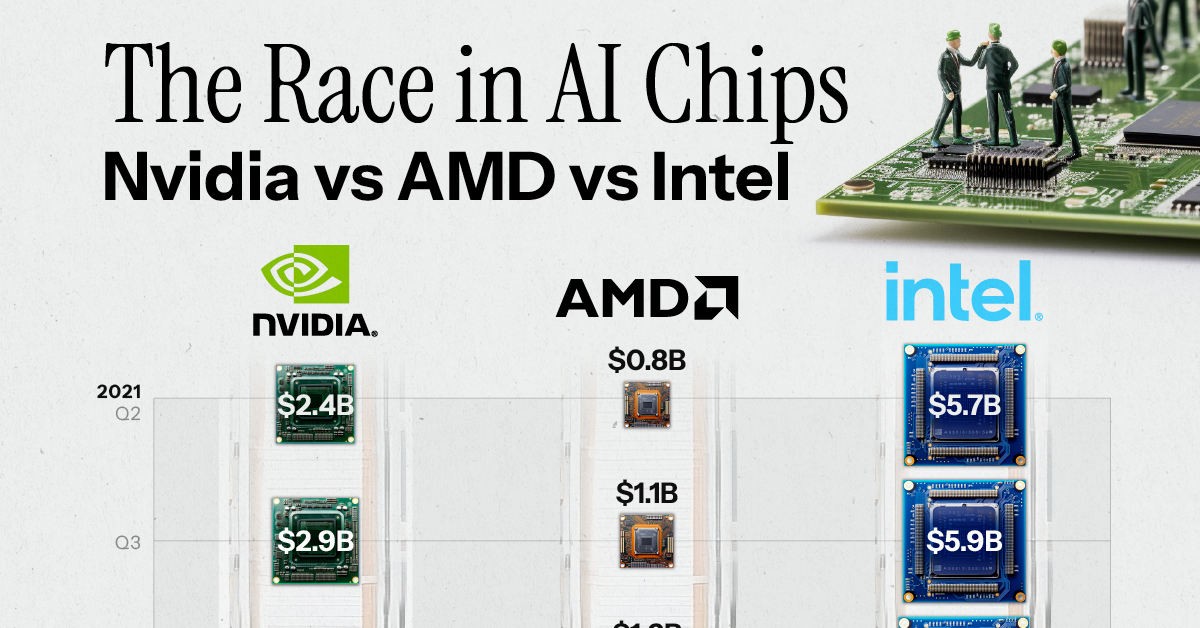

Nvidia vs. AMD vs. Intel: Comparing AI Chip Sales


Nvidia has become an early winner of the generative AI boom.

The company reported record revenue in its second quarter earnings report, with sales of AI chips playing a large role. If we compare to other American competitors, what do the AI chip sales of Nvidia vs. AMD vs. Intel look like?

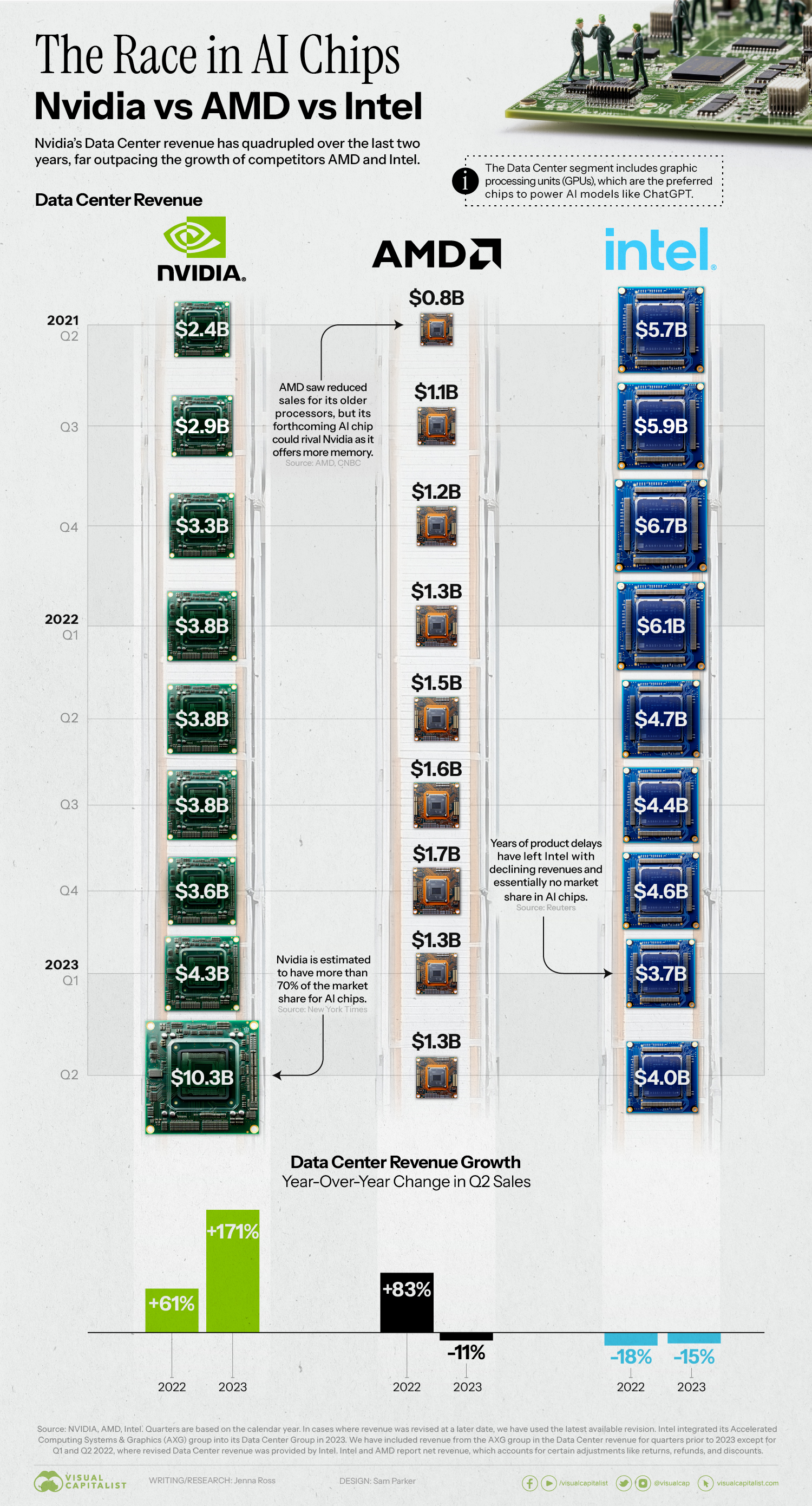

In this graphic, we use earnings reports from each company to see their revenue over time.

A Clear Leader Emerges

While the companies don’t report revenue for their AI chips specifically, they do share revenue for their Data Center segment.

The Data Center segment includes chips like Central Processing Units (CPUs), Data Processing Units (DPUs), and Graphics Processing Units (GPUs). GPUs are preferred for AI because they can perform many simple tasks simultaneously and efficiently.

Below, we show how quarterly Data Center revenue has grown for Nvidia vs. AMD vs. Intel.

| Quarter | Nvidia | AMD | Intel |
|---|---|---|---|
| Q2 2021 | $2.4B | $0.8B | $5.7B |
| Q3 2021 | $2.9B | $1.1B | $5.9B |
| Q4 2021 | $3.3B | $1.2B | $6.7B |
| Q1 2022 | $3.8B | $1.3B | $6.1B |
| Q2 2022 | $3.8B | $1.5B | $4.7B |
| Q3 2022 | $3.8B | $1.6B | $4.4B |
| Q4 2022 | $3.6B | $1.7B | $4.6B |
| Q1 2023 | $4.3B | $1.3B | $3.7B |
| Q2 2023 | $10.3B | $1.3B | $4.0B |

Source: Nvidia, AMD, Intel. Quarters are based on the calendar year. In cases where revenue was revised at a later date, we have used the latest available revision. Intel integrated its Accelerated Computing Systems & Graphics (AXG) group into its Data Center Group in 2023. We have included revenue from the AXG group in the Data Center revenue for quarters prior to 2023 except for Q1 and Q2 2022, where revised Data Center revenue was provided by Intel.

Nvidia’s Data Center revenue has more than quadrupled over the last two years, from $2.4B in Q2 2021 to $10.3B in Q2 2023, and the company is estimated to hold more than 70% of the market for AI chips.
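As a rough check on that growth claim, the first and last rows of the table can be compared directly. The sketch below is illustrative only: the `revenue` dictionary and the `growth_multiple` helper are hypothetical names, and the figures are the rounded values from the table, so the resulting ratios are approximate.

```python
# Quarterly Data Center revenue in billions of USD, copied from the
# table in this article (rounded values, so the ratios are approximate).
revenue = {
    "Nvidia": {"Q2 2021": 2.4, "Q2 2023": 10.3},
    "AMD": {"Q2 2021": 0.8, "Q2 2023": 1.3},
    "Intel": {"Q2 2021": 5.7, "Q2 2023": 4.0},
}


def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start` (hypothetical helper)."""
    if start <= 0:
        raise ValueError("start revenue must be positive")
    return end / start


multiples = {
    company: growth_multiple(quarters["Q2 2021"], quarters["Q2 2023"])
    for company, quarters in revenue.items()
}

for company, multiple in multiples.items():
    print(f"{company}: {multiple:.1f}x")
```

On these rounded figures, Nvidia comes out at roughly 4.3x, AMD at about 1.6x, and Intel below 1x, meaning its Data Center revenue shrank over the period.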

The company achieved dominance by recognizing the AI trend early, becoming a one-stop shop offering chips, software, and access to specialized computers. After hitting a $1 trillion market cap earlier in 2023, the stock continues to soar.

Competition Between Nvidia vs. AMD vs. Intel

If we compare Nvidia vs. AMD, the latter company has seen slower growth and less revenue. Its MI250 chip was found to be 80% as fast as Nvidia’s A100 chip.

However, AMD has recently put a focus on AI, announcing a new MI300X chip with 192GB of memory compared to the 141GB that Nvidia’s new GH200 offers. More memory reduces the number of GPUs needed, and could make AMD a stronger contender in the space.

In contrast, Intel has seen yearly revenue declines and has virtually no market share in AI chips. The company is better known for making traditional CPUs, and its foray into the AI space has been fraught with issues. Its Sapphire Rapids processor faced years of delays due to a complex design and numerous glitches.

Going forward, all three companies have indicated they plan to increase their AI offerings. It’s not hard to see why: ChatGPT reportedly runs on 10,000 Nvidia A100 chips, which would carry a total price tag of around $100 million.
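The per-chip price implied by those two reported figures can be worked out with simple division. This is a back-of-the-envelope sketch, not a price quote: both inputs are the approximate numbers cited above, the variable names are made up for illustration, and real pricing varies by vendor and volume.

```python
# Both inputs are the approximate, reported figures from the article.
chip_count = 10_000           # reported number of Nvidia A100 chips
total_cost_usd = 100_000_000  # approximate total price tag in USD

# Implied average price per chip under those two assumptions.
implied_price_per_chip = total_cost_usd / chip_count
print(f"${implied_price_per_chip:,.0f} per chip")
```

Under these assumptions, the implied average works out to about $10,000 per chip.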

As more AI models are developed, the infrastructure that powers them will be a huge revenue opportunity.


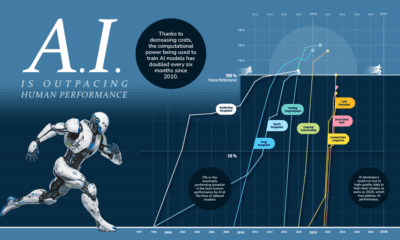

AI vs. Humans: Which Performs Certain Skills Better?

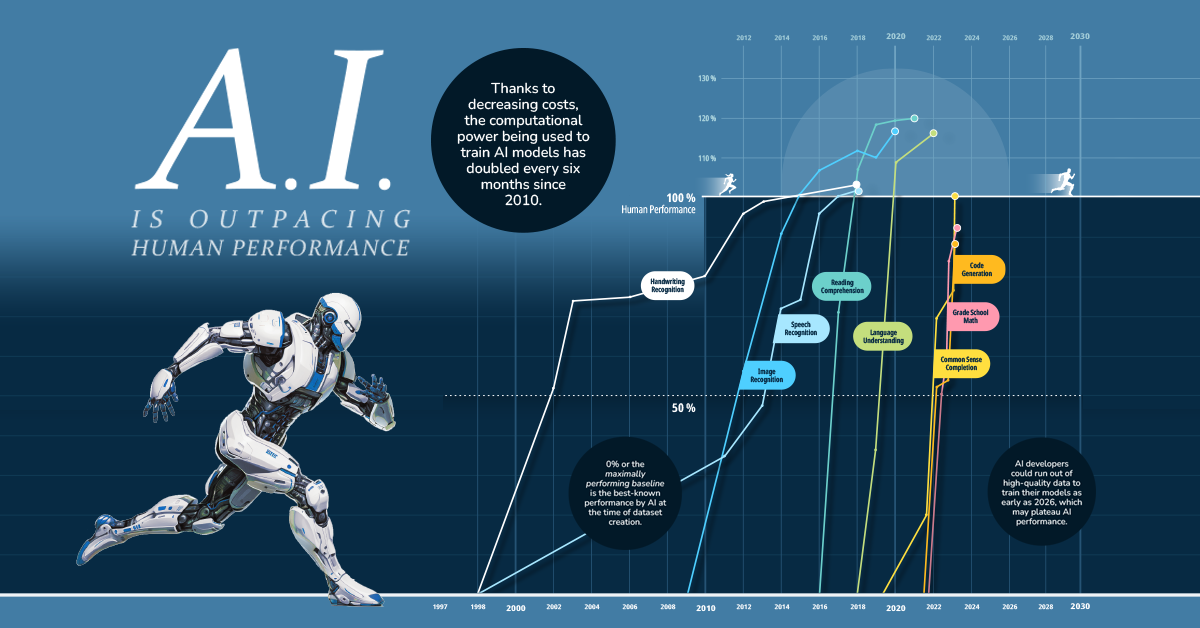

Progress in computation ability, data availability, and algorithm efficiency has led to rapid gains in performance for AI vs humans.


With ChatGPT’s explosive rise, AI has been making its presence felt for the masses, especially in traditional bastions of human capabilities—reading comprehension, speech recognition and image identification.

In fact, in the chart above it’s clear that AI has surpassed human performance in quite a few areas, and looks set to overtake humans elsewhere.

How Performance Gets Tested

Using data from Contextual AI, we visualize how quickly AI models have started to beat database benchmarks, as well as whether or not they’ve yet reached human levels of skill.

Each database is devised around a certain skill, like handwriting recognition, language understanding, or reading comprehension, while each percentage score contrasts with the following benchmarks:

- 0% or “maximally performing baseline”: This is equal to the best-known performance by AI at the time of dataset creation.
- 100%: This mark is equal to human performance on the dataset.

By creating a scale between these two points, the progress of AI models on each dataset could be tracked. Each point on a line signifies a best result and as the line trends upwards, AI models get closer and closer to matching human performance.
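One way to picture this scale is as a linear rescaling of raw benchmark scores. The sketch below assumes that interpretation. The source does not spell out the exact formula, so `normalized_score` and the sample accuracies are hypothetical and not taken from any real benchmark.

```python
def normalized_score(model: float, baseline: float, human: float) -> float:
    """Linearly rescale a raw score so baseline maps to 0 and human to 100.

    Assumes a simple linear scale between the two reference points.
    """
    if human == baseline:
        raise ValueError("human and baseline scores must differ")
    return (model - baseline) / (human - baseline) * 100


# Hypothetical raw accuracies, chosen only for illustration.
baseline_accuracy = 60  # best AI result when the dataset was created
human_accuracy = 90     # measured human performance
model_accuracy = 75     # a later model's result

score = normalized_score(model_accuracy, baseline_accuracy, human_accuracy)
print(score)  # 50.0, i.e. halfway between the baseline and human level
```

Under this assumption, a score above 100 would mean the model has surpassed human performance on that dataset.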

Below is a table of when AI started matching human performance across all eight skills:

| Skill | Matched Human Performance | Database Used |
|---|---|---|
| Handwriting Recognition | 2018 | MNIST |
| Speech Recognition | 2017 | Switchboard |
| Image Recognition | 2015 | ImageNet |
| Reading Comprehension | 2018 | SQuAD 1.1, 2.0 |
| Language Understanding | 2020 | GLUE |
| Common Sense Completion | 2023 | HellaSwag |
| Grade School Math | N/A | GSM8K |
| Code Generation | N/A | HumanEval |

A key observation from the chart is how much progress has been made since 2010. In fact, many of these databases (including SQuAD, GLUE, and HellaSwag) didn’t exist before 2015.

In response to benchmarks being rendered obsolete, some of the newer databases are constantly being updated with new and relevant data points. This is why AI models technically haven’t matched human performance in some areas (grade school math and code generation) yet—though they are well on their way.

What’s Led to AI Outperforming Humans?

But what has led to such speedy growth in AI’s abilities in the last few years?

Thanks to revolutions in computing power, data availability, and better algorithms, AI models are faster, have bigger datasets to learn from, and are optimized for efficiency compared to even a decade ago.

This is why headlines routinely talk about AI language models matching or beating human performance on standardized tests. In fact, a key problem for AI developers is that their models keep beating the benchmark databases devised to test them, yet still fail real-world tests.

Since further computing and algorithmic gains are expected in the next few years, this rapid progress is likely to continue. However, the next potential bottleneck to AI’s progress might not be AI itself, but a lack of data for models to train on.

-

VC+2 weeks ago

VC+2 weeks agoLifetime VC+ Subscription – One-time Offer Expires Aug 24, 2023

-

Markets4 days ago

Markets4 days agoVisualized: U.S. Corporate Bankruptcies On the Rise

-

Green2 weeks ago

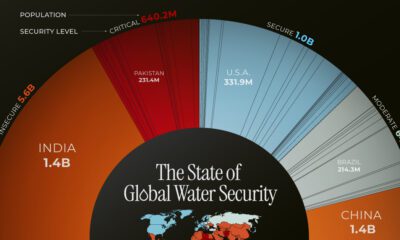

Green2 weeks agoVisualizing the Global Population by Water Security Levels

-

Economy3 days ago

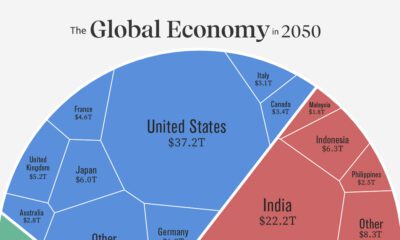

Economy3 days agoVisualizing the Future Global Economy by GDP in 2050

-

Markets4 weeks ago

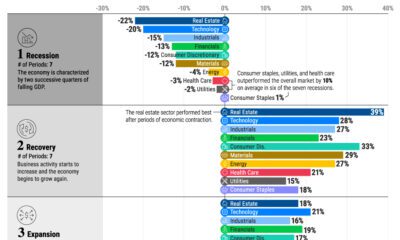

Markets4 weeks agoThe Top Performing S&P 500 Sectors Over the Business Cycle

-

Education2 weeks ago

Education2 weeks ago12 Different Ways to Organize the Periodic Table of Elements

-

Maps1 day ago

Maps1 day agoVisualizing the BRICS Expansion in 4 Charts

-

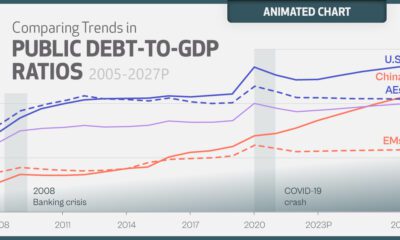

Debt4 weeks ago

Debt4 weeks agoAnimated: Global Debt Projections (2005-2027P)